Learning curve (machine learning)

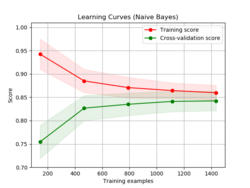

In machine learning, a learning curve (or training curve) plots a model's loss on a training set alongside the same loss evaluated on a validation data set, using the parameters that were fitted to the training set.[1] Synonyms include error curve, experience curve, improvement curve and generalization curve.[2]

More abstractly, a learning curve plots predictive performance against learning effort, where learning effort usually means the number of training samples and predictive performance usually means accuracy on test samples.[3]

Learning curves are useful for many purposes, including comparing different algorithms,[4] choosing model parameters during design,[5] adjusting optimization to improve convergence, and determining the amount of data used for training.[6]

Formal definition

One model of machine learning is the production of a function, f(x), which, given some information, x, predicts some variable, y. The function is learned from training data [math]\displaystyle{ X_\text{train} }[/math] and [math]\displaystyle{ Y_\text{train} }[/math]. This differs from mathematical optimization because [math]\displaystyle{ f }[/math] should also predict well for [math]\displaystyle{ x }[/math] outside of [math]\displaystyle{ X_\text{train} }[/math].

We often constrain the possible functions to a parameterized family of functions, [math]\displaystyle{ \{f_\theta(x): \theta \in \Theta \} }[/math], so that our function is more generalizable[7] or so that the function has certain properties such as those that make finding a good [math]\displaystyle{ f }[/math] easier, or because we have some a priori reason to think that these properties are true.[7]:172

Since it is generally not possible to produce a function that fits the data perfectly, a loss function [math]\displaystyle{ L(f_\theta(X), Y) }[/math] is needed to measure how good the prediction is. We then define an optimization process which finds a [math]\displaystyle{ \theta }[/math] that minimizes [math]\displaystyle{ L(f_\theta(X), Y) }[/math]; this minimizer is referred to as [math]\displaystyle{ \theta^*(X, Y) }[/math].
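As a minimal sketch of these definitions, the following Python example assumes a family of straight lines, [math]\displaystyle{ f_\theta(x) = ax + b }[/math] with [math]\displaystyle{ \theta = (a, b) }[/math], and mean squared error as the loss. Both choices, the toy data, and the function names `f`, `loss` and `fit` are illustrative assumptions rather than part of the definition.

```python
from statistics import mean

def f(theta, x):
    """Assumed parameterized family: f_theta(x) = a * x + b, theta = (a, b)."""
    a, b = theta
    return a * x + b

def loss(theta, xs, ys):
    """Assumed loss L(f_theta(X), Y): mean squared error."""
    return mean((f(theta, x) - y) ** 2 for x, y in zip(xs, ys))

def fit(xs, ys):
    """theta*(X, Y): closed-form least-squares minimizer for the line family."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx if sxx > 0 else 0.0  # fall back to a flat line if all x are equal
    return (a, my - a * mx)

# Toy training data (made up for illustration).
X = [0.0, 1.0, 2.0, 3.0]
Y = [0.1, 0.9, 2.2, 2.8]

theta_star = fit(X, Y)
print("theta* =", theta_star)
print("loss at theta* =", loss(theta_star, X, Y))
print("loss at (1, 0) =", loss((1.0, 0.0), X, Y))
```

By construction, the loss at `theta_star` is no larger than the loss at any other parameter value in the family, such as `(1.0, 0.0)`.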

Training curve for amount of data

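If the training data is [math]\displaystyle{ \{x_1, x_2, \dots, x_n \}, \{ y_1, y_2, \dots, y_n \} }[/math] and the validation data is [math]\displaystyle{ \{ x_1', x_2', \dots, x_m' \}, \{ y_1', y_2', \dots, y_m' \} }[/math], a learning curve is the plot of the two curves

- [math]\displaystyle{ i \mapsto L(f_{\theta^*(X_i, Y_i)}(X_i), Y_i ) }[/math]

- [math]\displaystyle{ i \mapsto L(f_{\theta^*(X_i, Y_i)}(X'), Y' ) }[/math]

where [math]\displaystyle{ X_i = \{ x_1, x_2, \dots, x_i \} }[/math] and [math]\displaystyle{ Y_i = \{ y_1, y_2, \dots, y_i \} }[/math] are the first [math]\displaystyle{ i }[/math] training examples, and [math]\displaystyle{ X' = \{ x_1', x_2', \dots, x_m' \} }[/math], [math]\displaystyle{ Y' = \{ y_1', y_2', \dots, y_m' \} }[/math] are the validation data.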
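The two curves above can be sketched in Python as follows. The example assumes a straight-line model fitted by least squares, mean squared error as the loss, synthetic data, and a validation set that is held fixed while the training prefix grows; the helper names `fit_line`, `mse` and `sample` are hypothetical.

```python
import random
from statistics import mean

def fit_line(xs, ys):
    """Least-squares line; returns theta = (a, b) for y = a * x + b."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx if sxx > 0 else 0.0
    return (a, my - a * mx)

def mse(theta, xs, ys):
    """Mean squared error of the line theta on (xs, ys)."""
    a, b = theta
    return mean((a * x + b - y) ** 2 for x, y in zip(xs, ys))

rng = random.Random(0)  # fixed seed so the run is repeatable

def sample(n):
    """Synthetic data (assumption): y = 2x + 1 plus Gaussian noise."""
    xs = [rng.uniform(0, 1) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0, 0.3) for x in xs]
    return xs, ys

X, Y = sample(200)          # training data
X_val, Y_val = sample(200)  # validation data, never used for fitting

sizes = [2, 5, 10, 50, 200]
train_curve, val_curve = [], []
for i in sizes:
    theta = fit_line(X[:i], Y[:i])                     # theta*(X_i, Y_i)
    train_curve.append(mse(theta, X[:i], Y[:i]))       # loss on the training prefix
    val_curve.append(mse(theta, X_val, Y_val))         # loss on the validation set

for i, tr, va in zip(sizes, train_curve, val_curve):
    print(f"i={i:4d}  train={tr:.4f}  validation={va:.4f}")
```

With only two training points the line passes through both, so the training loss is essentially zero there; how the validation loss behaves for small `i` depends on the particular sample.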

Training curve for number of iterations

Many optimization processes are iterative, repeating the same step until the process converges to an optimal value. Gradient descent is one such algorithm. If [math]\displaystyle{ \theta_i^* }[/math] denotes the approximation of the optimal [math]\displaystyle{ \theta }[/math] after [math]\displaystyle{ i }[/math] steps, a learning curve is the plot of the two curves

- [math]\displaystyle{ i \mapsto L(f_{\theta_i^*(X, Y)}(X), Y) }[/math]

- [math]\displaystyle{ i \mapsto L(f_{\theta_i^*(X, Y)}(X'), Y') }[/math]
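A minimal sketch of this iteration-based curve, assuming plain gradient descent on the mean squared error of a straight-line model, is shown below. The toy data, the learning rate `lr`, the starting point and the number of steps are arbitrary choices made for illustration.

```python
from statistics import mean

def mse(theta, xs, ys):
    """Mean squared error of the line y = a * x + b on (xs, ys)."""
    a, b = theta
    return mean((a * x + b - y) ** 2 for x, y in zip(xs, ys))

def gradient(theta, xs, ys):
    """Gradient of the mean squared error with respect to (a, b)."""
    a, b = theta
    n = len(xs)
    ga = sum(2 * (a * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2 * (a * x + b - y) for x, y in zip(xs, ys)) / n
    return ga, gb

# Toy training and validation data (made up for illustration).
X = [0.0, 0.5, 1.0, 1.5, 2.0]
Y = [1.1, 1.9, 3.2, 3.8, 5.1]
X_val = [0.25, 0.75, 1.25, 1.75]
Y_val = [1.4, 2.6, 3.4, 4.6]

theta = (0.0, 0.0)  # starting parameters
lr = 0.1            # learning rate (assumed small enough for this data)
train_curve, val_curve = [], []
for i in range(100):
    ga, gb = gradient(theta, X, Y)
    theta = (theta[0] - lr * ga, theta[1] - lr * gb)  # theta_i^* after this step
    train_curve.append(mse(theta, X, Y))
    val_curve.append(mse(theta, X_val, Y_val))

print("first step:", train_curve[0], val_curve[0])
print("last step: ", train_curve[-1], val_curve[-1])
```

Only the training data enters the gradient; the validation loss is recorded at each step but never used to update the parameters.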

Choosing the size of the training dataset

A learning curve is a tool for finding out how much a machine learning model benefits from adding more training data, and whether the estimator suffers more from a variance error or a bias error. If both the validation score and the training score converge to a value that is too low as the size of the training set increases, the model will not benefit much from more training data.[8]

In the machine learning domain, there are two kinds of learning curve, which differ in the x-axis: the experience of the model is graphed either as the number of training examples used for learning or as the number of iterations used in training the model.[9]
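The situation in which more data does not help can be illustrated with a deliberately too-simple model. The sketch below assumes synthetic data with a linear trend and fits only a constant, so it measures error (lower is better) rather than a score; the data, the constant model and the helper names are all hypothetical choices for illustration.

```python
import random
from statistics import mean

rng = random.Random(1)  # fixed seed so the run is repeatable

def sample(n):
    """Synthetic data (assumption): y = 2x + 1 plus noise with variance 0.09."""
    xs = [rng.uniform(0, 1) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0, 0.3) for x in xs]
    return xs, ys

def fit_constant(ys):
    """High-bias model: predict the training mean and ignore x entirely."""
    return mean(ys)

def mse_constant(c, ys):
    """Mean squared error of the constant prediction c."""
    return mean((c - y) ** 2 for y in ys)

_, Y = sample(2000)      # training targets (x is unused by this model)
_, Y_val = sample(2000)  # validation targets

sizes = [10, 100, 1000, 2000]
train_err, val_err = [], []
for n in sizes:
    c = fit_constant(Y[:n])
    train_err.append(mse_constant(c, Y[:n]))
    val_err.append(mse_constant(c, Y_val))

for n, tr, va in zip(sizes, train_err, val_err):
    print(f"n={n:5d}  train error={tr:.3f}  validation error={va:.3f}")
```

Both errors settle close to each other at a level well above the noise variance of 0.09 used to generate the data, which is the pattern associated with a bias error: further training examples leave the curves essentially flat.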

See also

- Overfitting

- Bias–variance tradeoff

- Model selection

- Cross-validation (statistics)

- Validity (statistics)

- Verification and validation

- Double descent

References

- ↑ Mohr, Felix; van Rijn, Jan N. (2022). "Learning Curves for Decision Making in Supervised Machine Learning - A Survey". arXiv preprint arXiv:2201.12150.

- ↑ Viering, Tom; Loog, Marco (2023-06-01). "The Shape of Learning Curves: A Review". IEEE Transactions on Pattern Analysis and Machine Intelligence 45 (6): 7799–7819. doi:10.1109/TPAMI.2022.3220744. ISSN 0162-8828. https://ieeexplore.ieee.org/document/9944190/.

- ↑ Perlich, Claudia (2010), Sammut, Claude; Webb, Geoffrey I., eds., "Learning Curves in Machine Learning" (in en), Encyclopedia of Machine Learning (Boston, MA: Springer US): pp. 577–580, doi:10.1007/978-0-387-30164-8_452, ISBN 978-0-387-30164-8, https://doi.org/10.1007/978-0-387-30164-8_452, retrieved 2023-07-06

- ↑ Madhavan, P.G. (1997). "A New Recurrent Neural Network Learning Algorithm for Time Series Prediction". Journal of Intelligent Systems. p. 113 Fig. 3. http://www.jininnovation.com/RecurrentNN_JIntlSys_PG.pdf.

- ↑ "Machine Learning 102: Practical Advice". Tutorial: Machine Learning for Astronomy with Scikit-learn. https://astroml.github.com/sklearn_tutorial/practical.html#learning-curves.

- ↑ Meek, Christopher; Thiesson, Bo; Heckerman, David (Summer 2002). "The Learning-Curve Sampling Method Applied to Model-Based Clustering". Journal of Machine Learning Research 2 (3): 397. http://connection.ebscohost.com/c/articles/7188676/learning-curve-sampling-method-applied-model-based-clustering.

- ↑ 7.0 7.1 Goodfellow, Ian; Bengio, Yoshua; Courville, Aaron (2016-11-18) (in en). Deep Learning. MIT Press. p. 108. ISBN 978-0-262-03561-3. https://books.google.com/books?id=Np9SDQAAQBAJ&q=deep%20learning%20goodfellow&pg=PA108.

- ↑ scikit-learn developers. "Validation curves: plotting scores to evaluate models — scikit-learn 0.20.2 documentation". https://scikit-learn.org/stable/modules/learning_curve.html#learning-curve.

- ↑ Sammut, Claude; Webb, Geoffrey I. (Eds.) (28 March 2011). Encyclopedia of Machine Learning (1st ed.). Springer. p. 578. ISBN 978-0-387-30768-8. https://books.google.com/books?id=i8hQhp1a62UC&q=neural+network+learning+curve&pg=PT604.
